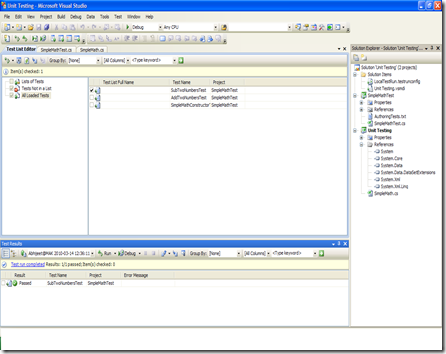

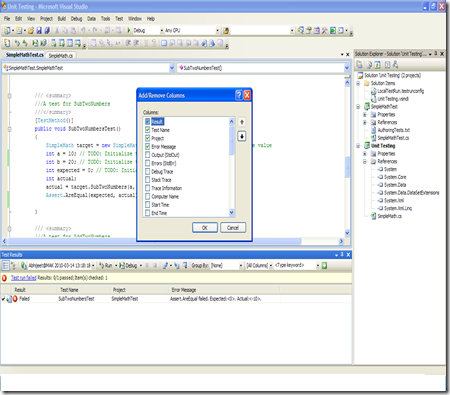

Moving on from unit testing, let's go through serialization and the scenarios where we need it. On the implementation side, we will look at what the .NET Framework supports and how it is used from C#.

What is Serialization?

In computer science terms, serialization usually refers to persisting the state of an object.

According to the Wikipedia page:

“In computer science, in the context of data storage and transmission, serialization is the process of converting a data structure or object into a sequence of bits so that it can be stored in a file or memory buffer, or transmitted across a network connection link to be "resurrected" later in the same or another computer environment.[1]

When the resulting series of bits is reread according to the serialization format, it can be used to create a semantically identical clone of the original object. For many complex objects, such as those that make extensive use of references, this process is not straightforward.

This process of serializing an object is also called deflating or marshalling an object.[2] The opposite operation, extracting a data structure from a series of bytes, is deserialization (which is also called inflating or unmarshalling).”
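To make this definition concrete, here is a minimal C# sketch of a full round trip in memory. It assumes the .NET Framework, where BinaryFormatter is available (it is obsolete from .NET 5 and no longer functional in .NET 9). The Player, Team and RoundTripDemo types are hypothetical, made up only for this illustration. The sketch shows that the deserialized copy is a new object with the same state, and that when two fields refer to the same object, that sharing survives the round trip, which is one of the reference-related details the quote alludes to.

```csharp
using System;
using System.IO;
using System.Runtime.Serialization.Formatters.Binary;

// Hypothetical types for illustration only (not from the post).
[Serializable]
public class Player {
    public string Name;
}

[Serializable]
public class Team {
    public Player Captain;
    public Player WicketKeeper; // may refer to the same Player as Captain
}

public static class RoundTripDemo {
    // Assumes .NET Framework: BinaryFormatter is obsolete from .NET 5
    // and no longer functional in .NET 9.
    public static Team Clone(Team original) {
        var formatter = new BinaryFormatter();
        using (var buffer = new MemoryStream()) {
            formatter.Serialize(buffer, original);      // object graph -> bytes
            buffer.Position = 0;                         // rewind before reading
            return (Team)formatter.Deserialize(buffer); // bytes -> new object graph
        }
    }

    public static void Main() {
        var player = new Player { Name = "Player One" };
        var team = new Team { Captain = player, WicketKeeper = player };

        Team copy = Clone(team);

        Console.WriteLine(ReferenceEquals(copy, team));                      // False: a different object
        Console.WriteLine(copy.Captain.Name);                                // Player One: same state
        Console.WriteLine(ReferenceEquals(copy.Captain, copy.WicketKeeper)); // True: sharing preserved
    }
}
```

The formatter tracks every object it has already written, so a second reference to the same Player is stored as a back-reference rather than as a second copy.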

Ways of Serialization in .NET

The simplest way to make a class serializable is to mark it with the Serializable attribute. An instance can then be written to a stream with a formatter such as BinaryFormatter.

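```csharp
using System;
using System.IO;
using System.Runtime.Serialization;
using System.Runtime.Serialization.Formatters.Binary;

[Serializable]
public class SaveScores {
    public int Score = 0;
    public int Wickets = 0;
    public String PlayerName = String.Empty;
}

public static class Program {
    public static void Main() {
        // The object whose state we want to persist.
        SaveScores scores = new SaveScores { Score = 120, Wickets = 3, PlayerName = "Player One" };

        // BinaryFormatter (.NET Framework; obsolete from .NET 5) writes the object's fields in a binary format.
        IFormatter formatter = new BinaryFormatter();

        // The output is binary, not text, so a .bin file name describes it better than .Txt.
        using (Stream saveStream = new FileStream("SaveScores.bin",
                   FileMode.Create, FileAccess.Write, FileShare.None)) {
            // Pass the object to serialize, not the formatter itself.
            formatter.Serialize(saveStream, scores);
        } // Disposing the stream closes the file, replacing an explicit Close() call.
    }
}
```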
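Deserialization is the reverse step: open the file for reading, call Deserialize, and cast the result back to the expected type. The sketch below assumes the .NET Framework (BinaryFormatter is obsolete from .NET 5 and no longer functional in .NET 9); the LoadScoresDemo class, its Save and Load helpers, and the file name SaveScores.bin are hypothetical choices for illustration.

```csharp
using System;
using System.IO;
using System.Runtime.Serialization;
using System.Runtime.Serialization.Formatters.Binary;

[Serializable]
public class SaveScores {
    public int Score = 0;
    public int Wickets = 0;
    public String PlayerName = String.Empty;
}

// Hypothetical helper class; assumes .NET Framework, where BinaryFormatter is supported.
public static class LoadScoresDemo {
    // Writes the scores to a file.
    public static void Save(string path, SaveScores scores) {
        IFormatter formatter = new BinaryFormatter();
        using (Stream stream = new FileStream(path, FileMode.Create, FileAccess.Write, FileShare.None)) {
            formatter.Serialize(stream, scores);
        }
    }

    // Reads the file back; Deserialize returns object, so the result must be cast.
    public static SaveScores Load(string path) {
        IFormatter formatter = new BinaryFormatter();
        using (Stream stream = new FileStream(path, FileMode.Open, FileAccess.Read, FileShare.Read)) {
            return (SaveScores)formatter.Deserialize(stream);
        }
    }

    public static void Main() {
        Save("SaveScores.bin", new SaveScores { Score = 120, Wickets = 3, PlayerName = "Player One" });
        SaveScores loaded = Load("SaveScores.bin");
        Console.WriteLine(loaded.PlayerName + ": " + loaded.Score + "/" + loaded.Wickets); // Player One: 120/3
    }
}
```

Only deserialize files you trust: BinaryFormatter can instantiate arbitrary serializable types from its input, which is a main reason it was deprecated in later .NET versions.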

We can also serialize selectively. To do this, mark the fields we do not want to serialize with the NonSerialized attribute.

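```csharp
using System;

[Serializable]
public class SaveScores {
    public int Score;
    // Skipped by formatters such as BinaryFormatter; after deserialization it holds its default value (0).
    [NonSerialized] public int Wickets;
    public String PlayerName = String.Empty;
}
```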
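A quick round trip shows the effect of NonSerialized: the Wickets value set before serialization does not come back. This is a minimal sketch assuming the .NET Framework (BinaryFormatter is obsolete from .NET 5 and no longer functional in .NET 9); the NonSerializedDemo class and its RoundTrip helper are hypothetical names used only here.

```csharp
using System;
using System.IO;
using System.Runtime.Serialization.Formatters.Binary;

[Serializable]
public class SaveScores {
    public int Score;
    [NonSerialized] public int Wickets; // not written to the stream
    public String PlayerName = String.Empty;
}

// Hypothetical demo class; assumes .NET Framework, where BinaryFormatter is supported.
public static class NonSerializedDemo {
    // Serializes to memory and immediately deserializes a copy.
    public static SaveScores RoundTrip(SaveScores original) {
        var formatter = new BinaryFormatter();
        using (var buffer = new MemoryStream()) {
            formatter.Serialize(buffer, original);
            buffer.Position = 0; // rewind before reading
            return (SaveScores)formatter.Deserialize(buffer);
        }
    }

    public static void Main() {
        var scores = new SaveScores { Score = 120, Wickets = 3, PlayerName = "Player One" };
        SaveScores copy = RoundTrip(scores);

        Console.WriteLine(copy.Score);      // 120
        Console.WriteLine(copy.Wickets);    // 0: the field was skipped, so it keeps the int default
        Console.WriteLine(copy.PlayerName); // Player One
    }
}
```

For a plain Serializable class like this one, BinaryFormatter does not run constructors or field initializers while deserializing, so a NonSerialized field ends up with its type's default value even if the class declares an initializer for it.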

Having seen this basic way of serializing an object, in upcoming posts I will talk about other important aspects of serialization:

- Versioning.

- Custom Serialization.

- Concepts behind Serialization.

- Resources and Useful links for Serialization.

Till then... Keep Reading, Keep Coding!